Master's Degree in Artificial Intelligence and Big Data + 60 ECTS Credits


Boltzmann machines are a type of recurrent stochastic neural network, named after the physicist Ludwig Boltzmann. They are a powerful tool in machine learning, particularly in the realm of generative modeling. This article will delve into the fundamental concepts of Boltzmann Machines, their applications, and the algorithms that drive them.

At the heart of Boltzmann Machines lies the Boltzmann distribution. This concept, borrowed from statistical mechanics, defines the probability of a system being in a particular state as a function of that state's energy: probability decreases exponentially as energy increases, so low-energy states are the most likely. In the context of Boltzmann Machines, states correspond to different configurations of the network's units, and energy is determined by an energy function specific to the model.
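Concretely, the Boltzmann distribution assigns each state $s$ the probability $P(s) = e^{-E(s)/T} / Z$, where $T$ is a temperature (usually fixed to 1 in Boltzmann Machines) and the partition function $Z$ sums $e^{-E/T}$ over all states. The minimal sketch below computes this for a short list of energies; the function name `boltzmann_probabilities` and the example energies are illustrative only.

```python
import math


def boltzmann_probabilities(energies, temperature=1.0):
    """Return P(s) = exp(-E(s)/T) / Z for a list of state energies."""
    # Subtract the minimum energy before exponentiating for numerical
    # stability; the shift cancels out when dividing by Z.
    e_min = min(energies)
    weights = [math.exp(-(e - e_min) / temperature) for e in energies]
    z = sum(weights)  # partition function (up to the constant shift)
    return [w / z for w in weights]


# Three hypothetical states with energies -2, 0 and 1.
probs = boltzmann_probabilities([-2.0, 0.0, 1.0])
print([round(p, 3) for p in probs])  # → [0.844, 0.114, 0.042]
```

Lowering the temperature concentrates probability on the lowest-energy state; raising it flattens the distribution toward uniform.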

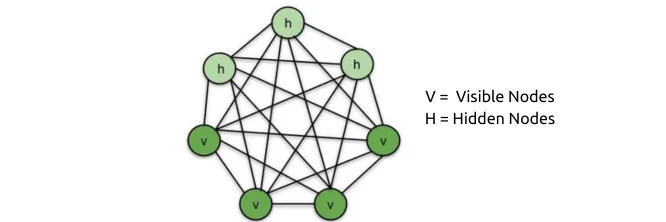

A Boltzmann Machine is composed of a set of interconnected binary units that can be either on or off. The connections between these units are symmetric and undirected. The network operates by sampling from a Boltzmann distribution over its possible configurations.
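A minimal sketch of how such a network samples, assuming binary units in {0, 1}, a symmetric weight matrix with a zero diagonal, one bias per unit, and temperature $T = 1$. The energy is $E(s) = -\sum_{i<j} w_{ij} s_i s_j - \sum_i b_i s_i$, and Gibbs sampling visits the units one at a time, switching unit $k$ on with probability $\sigma(b_k + \sum_{j \ne k} w_{kj} s_j)$. This follows from the energy difference between the unit's off and on states. The weights, biases and function names are hypothetical.

```python
import math
import random


def energy(state, weights, biases):
    """E(s) = -sum_{i<j} w_ij s_i s_j - sum_i b_i s_i for binary units."""
    n = len(state)
    pair_term = sum(
        weights[i][j] * state[i] * state[j]
        for i in range(n)
        for j in range(i + 1, n)
    )
    bias_term = sum(b * s for b, s in zip(biases, state))
    return -pair_term - bias_term


def prob_on(k, state, weights, biases):
    """P(s_k = 1 | all other units), i.e. sigmoid(E(s_k=0) - E(s_k=1))."""
    activation = biases[k] + sum(
        weights[k][j] * state[j] for j in range(len(state)) if j != k
    )
    return 1.0 / (1.0 + math.exp(-activation))


def gibbs_sweep(state, weights, biases, rng):
    """Update every unit once, in order, by sampling from its conditional."""
    for k in range(len(state)):
        state[k] = 1 if rng.random() < prob_on(k, state, weights, biases) else 0
    return state


# Hypothetical 3-unit network: units 0 and 1 excite each other,
# unit 2 inhibits both. W is symmetric with a zero diagonal.
W = [[0.0, 2.0, -1.0],
     [2.0, 0.0, -1.0],
     [-1.0, -1.0, 0.0]]
b = [-0.5, -0.5, 0.2]

rng = random.Random(0)
s = [0, 0, 0]
for _ in range(5):
    s = gibbs_sweep(s, W, b, rng)
    print(s, energy(s, W, b))
```

Run for long enough, the visited states are distributed according to the Boltzmann distribution defined by `energy`.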

A common variant of the Boltzmann Machine is the Restricted Boltzmann Machine (RBM). In an RBM, the units are divided into a layer of visible units and a layer of hidden units, and connections run only between the two layers, never within a layer. The energy function of an RBM can be expressed as:

$$E(\mathbf{v}, \mathbf{h}) = -\sum_{i,j} v_i w_{ij} h_j - \sum_i b_i v_i - \sum_j c_j h_j$$

where:

$v_i$ is the state of the i-th visible unit

$h_j$ is the state of the j-th hidden unit

$w_{ij}$ is the weight between the i-th visible unit and the j-th hidden unit

$b_i$ is the bias of the i-th visible unit

$c_j$ is the bias of the j-th hidden unit
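The RBM energy function translates directly into code. This is a minimal sketch assuming binary units, with `W[i][j]` playing the role of $w_{ij}$, `b` the visible biases and `c` the hidden biases; the function name and parameter values are hypothetical.

```python
def rbm_energy(v, h, W, b, c):
    """E(v, h) = -sum_ij v_i w_ij h_j - sum_i b_i v_i - sum_j c_j h_j.

    W[i][j] is the weight between visible unit i and hidden unit j,
    b holds the visible biases and c the hidden biases.
    """
    interaction = sum(
        v[i] * W[i][j] * h[j]
        for i in range(len(v))
        for j in range(len(h))
    )
    visible_bias = sum(bi * vi for bi, vi in zip(b, v))
    hidden_bias = sum(cj * hj for cj, hj in zip(c, h))
    return -interaction - visible_bias - hidden_bias


# Hypothetical RBM with 3 visible and 2 hidden units.
W = [[0.5, -1.0],
     [0.25, 0.5],
     [-0.5, 1.0]]
b = [0.1, -0.2, 0.0]
c = [0.0, 0.3]

print(rbm_energy([1, 0, 1], [1, 1], W, b, c))
```

The interaction term only couples visible units with hidden units; there are no visible-visible or hidden-hidden products. That missing set of terms is the "restriction" that distinguishes an RBM from a general Boltzmann Machine.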

Boltzmann Machines are versatile tools with applications in various fields, including:

Generative modeling: They can generate new data samples that resemble the training data.

Collaborative filtering: They can be used for recommending items to users.

Feature learning: They can learn meaningful representations of data.

Dimensionality reduction: They can reduce the dimensionality of data while preserving important information.

While the standard Boltzmann Machine is fully connected, several variants have been proposed to improve training efficiency and performance:

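Restricted Boltzmann Machine (RBM): As mentioned earlier, RBMs have a bipartite graph structure with no connections within a layer. As a result, the hidden units are conditionally independent given the visible units, and vice versa, which greatly simplifies sampling and training.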

Deep Belief Networks (DBN): DBNs are built by stacking multiple RBMs and training them one layer at a time, each on the hidden-unit activations of the layer below, allowing for hierarchical feature learning.
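The bipartite simplification can be made concrete. Because hidden units are not connected to each other, $P(h_j = 1 \mid \mathbf{v}) = \sigma(c_j + \sum_i v_i w_{ij})$ holds for each $j$ independently, and symmetrically $P(v_i = 1 \mid \mathbf{h}) = \sigma(b_i + \sum_j w_{ij} h_j)$. A whole layer can therefore be sampled in one step (block Gibbs sampling). The sketch assumes binary units and the RBM notation used above; function names and parameter values are illustrative.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def hidden_probs(v, W, c):
    """P(h_j = 1 | v) for every hidden unit j; they are independent given v."""
    return [
        sigmoid(c[j] + sum(v[i] * W[i][j] for i in range(len(v))))
        for j in range(len(c))
    ]


def visible_probs(h, W, b):
    """P(v_i = 1 | h) for every visible unit i; they are independent given h."""
    return [
        sigmoid(b[i] + sum(W[i][j] * h[j] for j in range(len(h))))
        for i in range(len(b))
    ]


def sample(probs, rng):
    """Draw one binary value per unit from its Bernoulli probability."""
    return [1 if rng.random() < p else 0 for p in probs]


def block_gibbs_step(v, W, b, c, rng):
    """One step v -> h -> v', sampling each whole layer at once."""
    h = sample(hidden_probs(v, W, c), rng)
    v_new = sample(visible_probs(h, W, b), rng)
    return v_new, h


# Hypothetical parameters: 3 visible units, 2 hidden units.
W = [[0.5, -1.0],
     [0.25, 0.5],
     [-0.5, 1.0]]
b = [0.1, -0.2, 0.0]
c = [0.0, 0.3]

rng = random.Random(42)
v = [1, 0, 1]
for _ in range(3):
    v, h = block_gibbs_step(v, W, b, c, rng)
    print("v =", v, "h =", h)
```

In a general Boltzmann Machine the same update would have to visit units one by one, because each unit's conditional depends on units in its own layer.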

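Training a Boltzmann Machine involves finding the parameters (weights and biases) that maximize the likelihood of the training data. For an RBM, the gradient of the log-likelihood with respect to a weight $w_{ij}$ is $\langle v_i h_j \rangle_{\text{data}} - \langle v_i h_j \rangle_{\text{model}}$. The first term is easy to compute, but the second requires samples from the model distribution, which are expensive to obtain. A common training algorithm, contrastive divergence, approximates this model term by running only a few steps (often just one) of Gibbs sampling, starting from the training data rather than from a random state.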
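A minimal sketch of one-step contrastive divergence (CD-1) for a binary RBM, assuming the RBM energy function defined earlier. The positive statistics come from a training vector and $P(\mathbf{h} \mid \mathbf{v})$; the negative statistics come from a single block Gibbs step started at that same vector, standing in for the intractable model expectation. The learning rate, epoch count, layer sizes, toy dataset and function names are arbitrary choices for illustration.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def hidden_probs(v, W, c):
    return [sigmoid(c[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(c))]


def visible_probs(h, W, b):
    return [sigmoid(b[i] + sum(W[i][j] * h[j] for j in range(len(h))))
            for i in range(len(b))]


def cd1_update(v0, W, b, c, lr, rng):
    """Apply one CD-1 update in place for a single binary training vector v0."""
    # Positive phase: hidden probabilities driven by the data.
    ph0 = hidden_probs(v0, W, c)
    h0 = [1 if rng.random() < p else 0 for p in ph0]
    # Negative phase: one Gibbs step, v0 -> h0 -> v1, then P(h | v1).
    v1 = [1 if rng.random() < p else 0 for p in visible_probs(h0, W, b)]
    ph1 = hidden_probs(v1, W, c)
    # Gradient estimate: <v_i h_j>_data - <v_i h_j>_reconstruction.
    for i in range(len(b)):
        for j in range(len(c)):
            W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
    for i in range(len(b)):
        b[i] += lr * (v0[i] - v1[i])
    for j in range(len(c)):
        c[j] += lr * (ph0[j] - ph1[j])
    return v1


def train_rbm(data, n_hidden, lr=0.1, epochs=200, seed=0):
    """Hypothetical training loop: plain CD-1, one vector at a time."""
    rng = random.Random(seed)
    n_visible = len(data[0])
    # Small random weights, zero biases.
    W = [[rng.gauss(0.0, 0.01) for _ in range(n_hidden)] for _ in range(n_visible)]
    b = [0.0] * n_visible
    c = [0.0] * n_hidden
    for _ in range(epochs):
        for v0 in data:
            cd1_update(v0, W, b, c, lr, rng)
    return W, b, c


# Toy dataset: two binary patterns.
data = [[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]]
W, b, c = train_rbm(data, n_hidden=2)
# Deterministic (probability-based) reconstruction of the first pattern.
print([round(p, 2) for p in visible_probs(hidden_probs(data[0], W, c), W, b)])
```

Persistent contrastive divergence, listed among the advanced topics, changes only the negative phase: instead of restarting the Gibbs chain at `v0` for every update, it continues the chain from where the previous update left it.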

Challenges and limitations

Despite their potential, Boltzmann Machines have several challenges:

Training time: Training Boltzmann Machines can be computationally expensive, especially for large models.

Local optima: The training process can get stuck in local optima, leading to suboptimal solutions.

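Difficulty in sampling: Exact sampling and exact likelihood computation require the partition function, a sum over all $2^n$ configurations of $n$ binary units, which is intractable for all but tiny models. In practice, Markov chain Monte Carlo methods such as Gibbs sampling are used instead, and they can mix slowly when the distribution has many well-separated modes.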

Boltzmann machines have a wide range of applications in machine learning and other fields. Some notable examples include:

Collaborative filtering: Recommending items to users based on their past preferences.

Image generation: Generating new images that are similar to the training data.

Natural language processing: Modeling the relationships between words and sentences.

Feature learning: Learning meaningful features from raw data.

Anomaly detection: Identifying unusual patterns in data.

Boltzmann machines are just one type of generative model. Other popular generative models include:

Variational Autoencoders (VAEs): VAEs are generative models that use a latent space representation to generate new data. They are often more efficient to train than Boltzmann machines.

Generative Adversarial Networks (GANs): GANs consist of a generator and a discriminator that compete with each other. They are capable of generating very high-quality data but can be difficult to train.

Each type of generative model has its own strengths and weaknesses, and the best choice for a particular application depends on the specific requirements.

There are several advanced topics related to Boltzmann machines, including:

Deep Boltzmann Machines: Deep Boltzmann machines are composed of multiple layers of hidden units, allowing them to learn more complex representations of data.

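Mean-Field Variational Inference: This technique approximates the intractable posterior distribution over the hidden units in Boltzmann machines with a fully factorized distribution, whose parameters are found by iterating simple fixed-point updates.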

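Persistent Contrastive Divergence: This variant of the contrastive divergence algorithm keeps its Gibbs sampling chains running across parameter updates instead of restarting them at the training data, which usually gives a better approximation of the model distribution.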
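A minimal sketch of mean-field inference, assuming a Boltzmann Machine whose hidden units are also connected to each other, so that, unlike in an RBM, $P(\mathbf{h} \mid \mathbf{v})$ does not factorize. The posterior is approximated by independent Bernoulli distributions with means $\mu_j$, updated with $\mu_j \leftarrow \sigma(c_j + \sum_i v_i w_{ij} + \sum_{k \ne j} u_{jk} \mu_k)$ until they stop changing. The matrices `W` (visible-hidden) and `U` (hidden-hidden, symmetric, zero diagonal), the tolerance and the example values are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def mean_field(v, W, U, c, max_iters=100, tol=1e-6):
    """Approximate P(h | v) by a factorized q(h); return the means mu_j = q(h_j = 1).

    W[i][j]: visible i <-> hidden j; U[j][k]: hidden j <-> hidden k
    (symmetric, zero diagonal); c: hidden biases.
    """
    n_h = len(c)
    # Input each hidden unit receives from the clamped visible units.
    external = [c[j] + sum(v[i] * W[i][j] for i in range(len(v)))
                for j in range(n_h)]
    mu = [0.5] * n_h
    for _ in range(max_iters):
        max_change = 0.0
        for j in range(n_h):  # sequential updates, reusing fresh values
            lateral = sum(U[j][k] * mu[k] for k in range(n_h) if k != j)
            new = sigmoid(external[j] + lateral)
            max_change = max(max_change, abs(new - mu[j]))
            mu[j] = new
        if max_change < tol:
            break
    return mu


# Hypothetical parameters: 2 visible units, 2 laterally connected hidden units.
W = [[1.0, -0.5], [0.5, 0.5]]
U = [[0.0, 1.5], [1.5, 0.0]]
c = [-0.5, 0.0]
print([round(m, 3) for m in mean_field([1, 0], W, U, c)])
```

When `U` is all zeros the fixed point is reached after one sweep and equals the exact RBM conditional $\sigma(c_j + \sum_i v_i w_{ij})$.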

Although Boltzmann machines date back to the 1980s, there are still many opportunities for future development. Some potential areas of focus include:

Developing more efficient training algorithms

Exploring new applications for Boltzmann machines

Improving the interpretability of Boltzmann machines

Combining Boltzmann machines with other machine learning techniques

As research in this area continues, we can expect to see even more innovative applications of Boltzmann machines in the future.

Boltzmann Machines offer a powerful framework for probabilistic modeling and generative learning. By understanding the underlying principles of Boltzmann Machines, researchers and engineers can develop innovative solutions for a wide range of problems.

To delve deeper into Boltzmann Machines, consider the following:

Explore different variants: Investigate other types of Boltzmann Machines, such as deep Boltzmann Machines, along with approximate inference techniques such as mean-field inference.

Study advanced training algorithms: Learn about techniques like persistent contrastive divergence and parallel tempering for improving training efficiency.

Apply Boltzmann Machines to real-world problems: Experiment with Boltzmann Machines on different datasets and applications to gain practical experience.
